Using Natural Language Processing to Understand Grey Areas in Brand Safety

by Sonja Kroll on 15th May 2018 in News

New technology may be the root of issues like fake news and malicious ads, but it is also helping publishers and advertisers take the next step forward in the battle to protect brands from unintentional misplacement of ads against inappropriate content, argues Anant Joshi (pictured below), CRO, Factmata, in this piece for ExchangeWire.

When news breaks of a major brand’s digital ads appearing alongside inappropriate or extremist content, there is an almost audible, collective sigh of relief from CMOs around the world, glad that it is not them this time. For the brand involved, it is a nightmare of epic proportions, because it erodes the trust between consumers and brands.

As marketers, we must not allow fake news or malicious content to rule us

Anant Joshi, CRO, Factmata

Today, more than three-quarters (78%) of UK marketing heads say they have become more concerned about brand safety, according to research by Teads.

Brand safety is not something that can be ‘solved’, and the risk of ads being unintentionally misplaced will always concern marketers. Advertisers, media agencies, and technology companies need to come together to protect consumers and businesses from malicious or misleading content online.

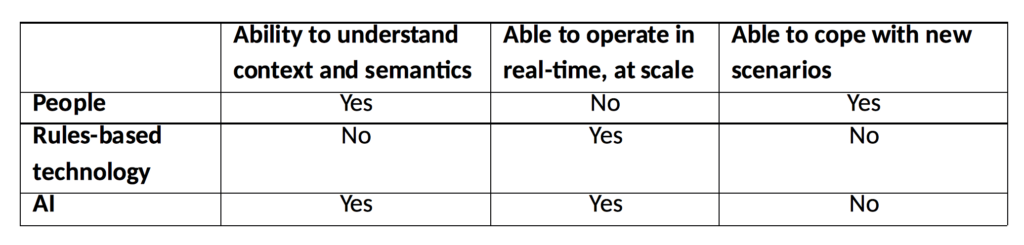

Broadly speaking, there are three ways to tackle the brand-safety problem: people, rules, and artificial intelligence (AI). Each has its advantages and disadvantages. People can understand context and semantics and cope with new scenarios, but cannot operate in real time or at scale. Rules-based technology can operate at scale, but cannot understand context and semantics or cope with unknown scenarios. AI can understand context and semantics and operate in real time and at scale, but requires human intervention to fully understand new scenarios and act appropriately.

When used together, AI, rules-based technology, and people have the power to detect and block even the most subtly inappropriate content, regardless of where on the web it appears.

Is it, or isn’t it?

While fake news continues to make global headlines, there are other forms of content that upset consumers and advertisers alike, but are harder to detect and classify.

Some consumers and brands may consider strongly opinionated content to be hate speech, clickbait, or political propaganda, whilst others find it palatable. This is known as a ‘subjective grey area’. For example, in the U.S., right-wing supporters typically consider content from Fox News to be true and reliable, while those on the political left do not, preferring other news sources such as CNN.

An open, transparent quality and safety signal that identifies potentially undesirable content lets brands decide how far into the grey area they wish to go. This puts them in control of their own reputation and helps them maximise performance within their own brand-safety tolerance level.

The ability to detect grey-area content will accelerate marketers’ fight against bad actors, and the constant evolution of AI will help the technology keep pace with the untoward players that plague the industry.

How to identify the lesser-spotted grey areas

One way to detect grey-area content is to have people manually evaluate its quality and credibility. Factmata’s Briefr product was developed in response to an increasing number of subtle, nuanced brand-safety incidents that existing technology did not pick up. Using feedback from distinct communities and cohorts of people, it classifies grey-area content based on a human-derived understanding of its context, semantics, and other nuances.

The data gathered from Briefr trains the AI to respond to similar scenarios, enabling rapid, automated detection of subtly malicious sites and content. In an advertising context, the data from Briefr enables companies to identify when their brands might appear in undesirable locations online and act accordingly.

Matt Harada, GM data and demand, Sovrn, said: “Sovrn is passionate about working with independent publishers of quality content. To offer further quality metrics to our buyers, we have chosen to work with Factmata to help build new whitelists of inventory that are free of hate speech, politically extreme, and fake/spoof content. This is a new offering in the programmatic advertising market; and Factmata is a strong partner in this space. We are excited to be part of Factmata’s journey to help indirect programmatic offer a cleaner, healthier environment for brands.”
