AI, Copyright & the Robots from 1994

by Mathew Broughton on 12th Aug 2025 in News

In this article, ExchangeWire research lead Mat Broughton takes a somewhat surrealist look at the house of cards underpinning AI data gathering, and what can be done to protect publishers.

Like watching gulls (or bulky drunk lads) fight over a chip, there’s something wonderful about watching companies throw down their legalese gloves and have a good old bareknuckle rumble out in public.

In the red corner this week, AI startup Perplexity, facing blows from its blue team opponent Cloudflare, an internet infrastructure firm. On 4th August, Cloudflare came out swinging, accusing Perplexity of “stealth” in ignoring robots.txt files, which signal whether a search engine or AI crawler is allowed to spider a site (more on that later though…).

Boo hiss boo. Another example of an absurdly-funded AI firm spitting in the face of copyright rules, harvesting everything across the web to the detriment of those who created that data in the first place?

For once, I’m not too sure. I’m not a shill for AI platforms in the slightest, and think they are getting away with murder when it comes to paying their way, especially towards the smaller local publishers which don’t get a crumb of the multi-billion dollar AI cake. However, the examples Cloudflare has provided are hardly a smoking gun.

“We conducted an experiment by querying Perplexity AI with questions about these domains, and discovered Perplexity was still providing detailed information regarding the exact content hosted on each of these restricted domains” [emphasis added]

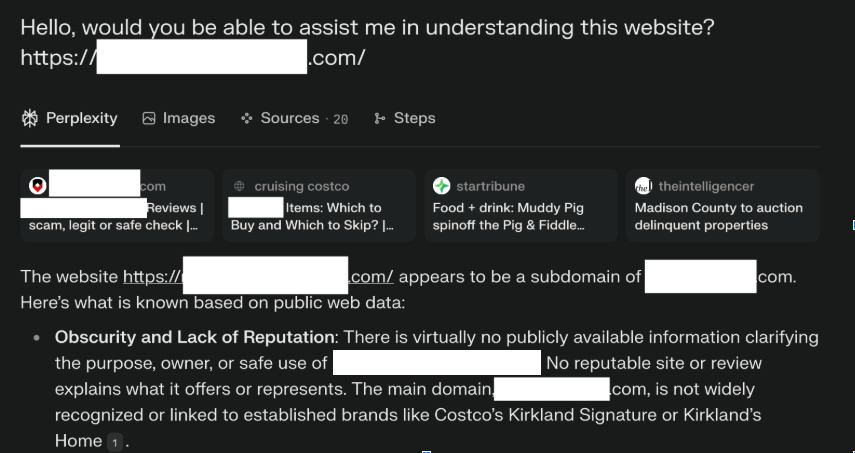

Here’s one such example:

Here's me attempting to find this "detailed information":

Cloudflare is, in and of itself, a commercial business with skin in the game. In July, it announced an update to its product which allows its customers to choose whether to block AI scrapers (a good idea in fairness, more vomitably summarised by its executives as “Content Independence Day”). But why Perplexity specifically? Aren’t all AI scrapers doing this sort of circumvention? Perhaps the most telling reason why Cloudflare has rounded on Perplexity, came clear a day later on 5th August, when it released a lovey-dovey soliloquy to Perplexity-rival OpenAI. Ick.

Moreover, it’s difficult to be outraged over this Perplexity case when Google still bundles its search and AI scrapers. If publishers don’t allow its AI scraper, you won’t be in AI overviews. Fair enough, however when Google pushes AI overviews more and more, to the detriment of indexed links, those publishers end up with zero traffic.

Stone age robots, bronze age problems

Cloudflare’s motivations and Google’s market power aside, the issue of AI scraping remains a serious one. With antiquated copyright laws ineffective at halting the mass devouring of data, and governments more sympathetic towards US AI firms than creatives and publishers alike, controlling AI scraping has largely fallen upon robots.txt.

Unlike Sir Killalot in Robot Wars however, these house robots are not able to control their arenas.

The core issue is that rules around robots.txt and donotcrawl are more of a gentleman’s agreement rather than unbreakable vows. A protocol from 1994 with seemingly no official stewardship. It’s a signal not to crawl, rather than an active system that blocks crawling.

A keep off the grass sign won’t stop moles digging up the lawn.

Flaws in robots.txt flew under the radar in the SEO age. When pages were simply being indexed by spiders, its only real use was to stop certain elements of a website, such as admin login pages, from being put up on search engines. Now, when more and more of a page is being pulled from the site and being presented as the AI’s own output, it’s a whopper of an issue, and is only getting worse.

So can the robots be beefed up? At present, not likely. One web standards body, the W3C, has faced recent criticism on its support of big tech policies to the detriment of independent players. In fairness, a community group on robots.txt was formed in February last year, with aims to renovate the protocol as it is “hard to maintain (almost a full time job right now) if you do not wish for your websites content to be applied for e.g. training AI”. The group closed in October, having failed to nominate a chair nor make a single subsequent post.

Another voluntary body, the Internet Engineering Task Force (IETF), put forward the widely-accepted standard of robots.txt in 2022. Promising sign that they could be the ones to update robots.txt, until reading the employer of each of the three engineers who forged those proposals:

- Google LLC

- Google LLC

- Google LLC

That seems likely then...

Protecting the publishers

Yes, the internet is a glorious hubbub of everything from cat videos, to booking your holiday, from breaking news, to how to’s. And a major part of that wonderful mishmash is its decentralised nature, built on commonly-agreed standards. However if those standards are now being mandated by single private companies, or industry bodies hijacked by the largest of corporations, who protects that?

Though AI licensing agreements are starting to see coins come back into the pockets of publishers, these are all on the upper end of the size spectrum. Smaller publishers, those most exposed to having their human-written, original work nabbed, are still left with nothing, thus damaging the internet as a whole. A zoo with the kangaroo, but not the koala, is a poorer one.

Promising initiatives are starting to emerge, most notably the LLM Content Ingest API project from the IAB Tech Lab, which proposes a cost per crawl monetisation opportunity alongside stronger controls for LLM/AI bot access. However, these urgently need to be actioned, and supported by stronger copyright legislation, for the supply chain to keep its supply side.

Follow ExchangeWire