Brand Safety in the Privacy-First Era

by Mathew Broughton, Grace Dillon on 28th Jul 2021 in News

In an 18-month period punctuated by a global pandemic, an especially vitriolic US election which preceded an attack on the Capitol building, and the lumbering boulder of Brexit, concerns over brand safety have understandably been high. While the term “fake news” is now enough to induce Thomas the Tank Engine-levels of eye rolling, awareness of disinformation is reaching new highs, even as disinformation itself continues to thrive.

The damage to a brand’s reputation from safety violations goes far beyond raising a few titters from the user and the potential to star in Private Eye’s “Malgorithms” section. A 2018 study ascertained that consumers express 2.8 times less intent to engage with a brand if its ad appeared next to unsuitable content. At the time of the report, a common perception amongst consumers was that the appearance of these ads indicated the brands’ endorsement of the content.

In this in-depth feature article, ExchangeWire editors Mat Broughton and Grace Dillon examine the roles each market participant plays in upholding brand safety, how emerging channels are altering the safety and suitability landscapes, and the role of ad tech in quashing disinformation.

Publisher, platform, and brand: brand safety standards across the supply chain

Perhaps the most high-profile brand safety violations took place across Google’s portfolio, most notably on YouTube, throughout 2017, when ads from multiple brands were shown against offensive content including terrorism promotion, antisemitism, and homophobia. This forced the withdrawal of brands including AT&T, Johnson & Johnson, L’Oréal, and Verizon, among others. While many of these have since returned to the platform, YouTube’s UK marketing director Nishma Robb admitted in 2019 that achieving 100% brand safety is not the “reality of our platform”, following an investigation which revealed a host of inappropriate comments on innocuous videos of children. While this is certainly a pragmatic viewpoint, a platform which generates US$6bn (£4.3bn) in ad revenue per quarter, and is backed by the resources of Google, surely has a responsibility towards its brand partners, and more importantly the general public, to at least aim for the full monty of 100% brand safety, particularly as it moves to run adverts on all channels, not just those of approved partners.

While not every platform and publisher can match the scale of YouTube, they all share the same responsibility to protect their partners from appearing against unsafe, or unsuitable, content. The complex case above, and last year’s incidents in which ads were unnecessarily blocked against coronavirus-related content, again raise questions about brand safety systems. These often rely on standards imposed by the advertisers themselves, which may be based on broad keyword-based measures that exclude context. In recent years, brand safety and suitability tools have typically been bundled with ad fraud and viewability solutions; it will be interesting to see whether the greater prominence of context-based advertising solutions affects this dynamic. Regardless, platforms, publishers, and tech providers should review both their own and their partners’ safety standards to ensure that ads are not being placed in inappropriate environments, and that revenue is not being lost to overzealous suitability blocking.

While platforms and publishers have a critical role in upholding brand safety and suitability, the importance of brands and agencies monitoring the placement of their ads cannot be overstated. One brand to find itself in hot water more recently was video games publisher Gaijin Entertainment. At the start of the year, the Hungary-based firm was accused of actively supporting the self-styled Donetsk People’s Republic (DPR), a separatist group in the disputed Ukrainian region of Donechyna, after integrated advertising appeared within a YouTube channel showing illegal weapons tests in the area. Gaijin Entertainment denied these claims, stating that the placements had been ordered by its (unnamed) agency without its prior knowledge.

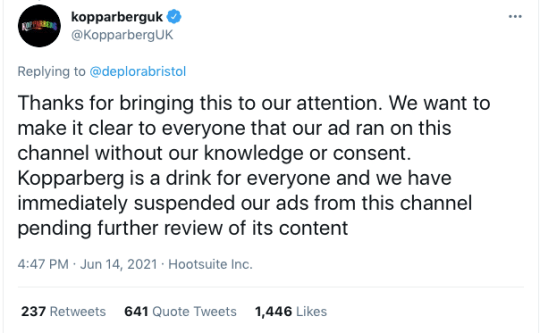

This apparent lack of awareness by brands of where their ads are being placed has come to the forefront in recent weeks, when a host of brands including Ikea, Grolsch, Kopparberg, The Open University, and Ovo Energy pulled their ads from fledgling right-wing news channel GB News, all claiming they’d been blindsided... by the appearance of their own ads.

Various reasons were cited by the brands for why their ads were placed on the channel without their consent, ranging from laying the blame at the door of their media agency to media buying algorithms. Continued misconceptions over a trade-off between brand safety and campaign effectiveness may also help explain why these brands were not on top of their media buying: a 2018 transatlantic study conducted by the now-defunct Sizmek suggested that 64% of marketers believe achieving full brand safety comes at the expense of campaign performance.

While platforms and publishers cannot shirk their role in upholding brand safety standards within their own corridors, brands and agencies also need, at the bare minimum, to keep tabs on their own advertising. If marketers aren’t aware of their own ads running in the (comparatively) limited pool of UK broadcast television channels, what does this suggest about their knowledge of where their ads are running in the nebulous web ecosystem? This will become even more critical in the new privacy-focused era, where contextual relevance carries greater weight, particularly on the open web.

Brand safety in emerging channels

At a time when concerns still persist around established forms of digital media, it is no surprise that emerging channels, such as audio, CTV, DOOH, and gaming, are highly vulnerable to brand safety violations. Developing effective technology solutions and promoting greater transparency across the supply chain could prove vital in upholding brand safety standards within emerging channels, as proposed by Ross Nicol, VP EMEA, Zefr. Nicol writes: “Marketers are looking for greater transparency around where their ads will appear on emerging channels, both in terms of first-party data controls and from their third-party technology partnerships. As emerging channels are filled with an increasing variety of media formats – such as video, images and audio – it’s clear that there needs to be a common rubric to help the buy-side safely navigate and understand the suitability of each when considering where to place their ads.

“Standards from the Global Alliance for Responsible Media (GARM) are being adopted by major platforms and agencies and will go a long way towards promoting consistency and helping to speed up the process of minimising ad adjacency against harmful content. However, the question still stands, can the brand safety tech keep up?”

One of likely many answers to this question may lie in an avenue already being explored in targeting: contextual. Contextual solutions have become sophisticated enough to analyse far more than just text, including audio and video (frame by frame). This means they can assess a far greater range of data points to ensure that an ad is placed in a suitable, brand-safe environment, and they can be applied across the supply chain. On the buy side, marketers can revise and refine their brand suitability criteria and adapt their contextual targeting to these new standards. Meanwhile, publishers and platforms can employ more sophisticated contextual solutions, beyond keyword potshotting, to flag potentially illegal content.

The role of ad tech in fighting disinformation

Ad tech, and the wider digital media industry as a whole, has also come under the spotlight for the way in which it fights, or indeed fuels, disinformation. While its catchier moniker “fake news” rose to prominence in a political context via sunset-coloured lips, false information about the various Covid-19 vaccines has brought disinformation into unpleasant focus in recent months. In the last few days, US President Joe Biden launched a scathing attack on Facebook over its failure to combat false information about Covid vaccines on its platform, saying: “They’re killing people - I mean they’re really, look, the only pandemic we have is among the unvaccinated.”

Facebook’s failure to appropriately police its platform was highlighted earlier in a damning internal memo written by data scientist Sophie Zhang. One example was a nine-month delay by Facebook executives in acting on a sustained campaign designed to artificially boost the prospects of Honduran president Juan Orlando Hernández, with bots returning a mere two weeks after being removed. It is important to stress that this is not an issue exclusive to Facebook, and the nebulous nature of the programmatic ecosystem means that a collaborative approach is needed to stamp out disinformation. As Věra Jourová, Vice President for Values and Transparency, European Commission, said of the current EU framework on combatting disinformation: “A new stronger Code is necessary as we need online platforms and other players to address the systemic risks of their services and algorithmic amplification, stop policing themselves alone and stop allowing to make money on disinformation, while fully preserving the freedom of speech.”

The industry’s current, flimsy self-policing has not gone unnoticed by regulators, with the EU announcing in May that it is set to strengthen its code of practice, with the new version due to be finalised by September. Even so, according to Thierry Breton, Commissioner for Internal Market, four of the five major platforms to have signed up to the code (Google, Facebook, Microsoft, Twitter, and TikTok) have not lived up to their commitments. Why should that change if the code remains voluntary? The bolstering of the code is seen as a partial precursor to the Digital Services Act, which is expected midway through 2022 at the earliest. Though the Act is, in theory, more enforceable given its legislative rather than voluntary nature, some elements of the ostensibly similar GDPR have not been acted upon (right, Mr. Schrems?).

"Industry players – publishers, tech platforms, advertisers and even governments – must start speaking up"

As with the brand safety issues discussed earlier, it is the role of all parties within the ecosystem to combat disinformation, a viewpoint supported by Richard Reeves, Managing Director at AOP. Reeves comments, “Battling misinformation and safeguarding trust rank high on publishers’ agendas, but the responsibility shouldn’t lie on one industry player – it's a battle the entire industry must fight together. It’s a sentiment echoed by consumers too, who – when surveyed by the Trustworthy Accountability Group – said that brand safety responsibility should lie equally across all industry players including advertisers (52%), agencies (56%), technology providers (47%), and publishers (54%).

“Trust and brand safety go hand-in-hand, and of course we’re all aware of the importance of advertising in a trusted environment and the potential pitfalls of programmatic trading. But the argument falls down when it comes to premium publishers. They in their very nature are brand-safe and fully accountable – and always have been. Despite this, they have fully engaged with industry-wide calls for implementing greater standards, even though they themselves are already operating at a far higher level. The result? Premium publishers are now suffering through the use of blunt, clumsy, brand-safety tools, imposed on them to supposedly ‘improve’ the ecosystem. Now they suffer the negative consequences such as misfiring reporting pixels and page latency – damaging both revenue streams and the user experience.

“We fully acknowledge there is no one quick and easy fix to upholding brand safety and fighting disinformation – and we all have a role to play in facilitating change. Industry players – publishers, tech platforms, advertisers and even governments – must start speaking up, asking the important questions and taking accountability to address these issues.”
