How Can Brands Become Visible in the New Era of LLM Search?

16th Jan 2026 in News

How important is brand perception? We sat down with Jellyfish's product director Frederic Derian and senior media director Victor Batista to discuss the rise of LLMs and what this means for advertisers.

As chatbots assume a dominant spot in consumers’ search habits, brands and advertisers are navigating new challenges. They face a transformed landscape: SEO fades into the background, while attribution and conversion become increasingly hard to track, causing a great deal of measurable engagement to be lost.

Amid the change, how can brands stay visible? According to Jellyfish, leveraging brand perception through AI is the way. The company claims to be the first to use AI models’ perception of brands to automatically generate advertising optimisations, through its proprietary Share of Model tool.

Frederic Derian, Jellyfish’s product director, talked us through the tool. In short, it helps the company’s clients “to be more searchable, visible, and shoppable in the generative search ecosystem.” The tool is directly connected to LLMs including ChatGPT and Gemini, which allows it to carry out constant analysis of how brands are perceived.

Harnessing LLMs’ perception of a brand

There’s a lot that can be gained from using AI to automatically generate optimisations in ad campaigns. Jellyfish’s senior media director Victor Batista highlighted the benefits. “We can tap into specific segments of the market, or specific sentiments or keywords for example, that Google or the platforms themselves might not have identified yet, to serve ads against,” he told us.

“Most businesses are going to have keywords or targets within their campaigns that are focused on their own value propositions or perceptions, but what we get in addition when we run the brand through something like Share of Model, is that we can see how the LLMs perceive a brand,” Batista explained.

He gave the example of an insurance company: the tool tells them the brand is recommended for someone who requires high-value insurance, because it offers the best comparable coverage and benefits. “The brand may not actually be going for that, because they’re working with a value proposition of raw value,” Batista expanded. “If Share of Model recommends we target keywords focused on luxury goods insurance for example, that we might not have considered – the benefit of piping that directly is that we then construct our campaigns to target those specific terms.”

The team has seen an increase not only in traffic to clients’ sites, but in traffic that actually converts. Batista attributed this to unearthing pockets of performance that haven’t yet been tapped into by either Jellyfish or its competitors. “It’s actually cheaper to play in that space, rather than going after broader concepts, as most brands do in general,” he added.

According to Batista, the process is a self-reinforcing cycle. “The perception of the model is based on the perception of the people, which are the consumers, whose perceptions are analysed. Then you also have other groups of people being influenced by the model, which is then influenced by their change…” He told us that the team wants to tap into the area in which it has direct insight, which is the model, and add that to what it already knows about its brands and consumers.

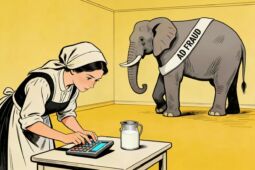

Are there any risks?

“Everyone knows that LLMs and AI can hallucinate,” Derian acknowledged. So, what is being done to minimise risks?

One of the ways the team is optimising its algorithm is by ensuring consistency with brands’ guidelines, which are accessible through the client’s Google Ads account. Iterative refinement is also used to keep the generated recommendations relevant.

Importantly, no changes are applied without verification from a human team member. The model may recommend adjustments that are not in line with a brand’s messaging, but such suggestions would not go live unless a person approves them. Batista explained why it can still be useful for the tool to recommend something that is not consistent with a brand’s messaging: “It’s valuable to have the insight, especially since it can influence wider correction in the brand’s strategy, if desired.”

We wanted to know more about what goes on behind the scenes, and how the team can ensure that the model does not act on any biases it may have.

They explained that the tool’s brand perception technology is based on thousands of prompts and the answers to users’ queries. With this large volume of data, they are able to conduct semantic analysis, monitoring changes over time and the fluctuation of a brand’s perceived strengths and weaknesses.

Interestingly, Derian also noted that brand perception differs between LLMs. “Brand perception itself will fluctuate from one model to another because sources used by each model are different. So yes, we observe a lot of differences. This is one of the key insights accessible on the platform.”
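
To make the idea of comparing brand perception across models more concrete, here is a minimal Python sketch. It is not how Share of Model works, as the interview does not describe the tool's internals. It simply assumes that answers from several LLMs have already been collected, and counts how often each brand is mentioned per model. The function name `mention_share`, the model labels, the brand names, and the sample answers are all hypothetical, and plain mention counting is a much cruder stand-in for the semantic analysis the team describes.

```python
import re
from collections import Counter, defaultdict


def mention_share(answers, brands):
    """Return {model: {brand: share of brand mentions}} for collected answers.

    `answers` is an iterable of (model_name, answer_text) pairs.
    Each answer counts at most once per brand.
    """
    counts = defaultdict(Counter)
    for model, text in answers:
        model_counts = counts[model]  # register the model even if nothing matches
        for brand in brands:
            # Whole-word, case-insensitive match on the brand name.
            if re.search(rf"\b{re.escape(brand)}\b", text, flags=re.IGNORECASE):
                model_counts[brand] += 1

    shares = {}
    for model, model_counts in counts.items():
        total = sum(model_counts.values())
        shares[model] = {
            brand: (model_counts[brand] / total if total else 0.0)
            for brand in brands
        }
    return shares


# Hypothetical sample data: invented brands and invented model answers.
answers = [
    ("model_a", "For high-value cover, Acme Insure is often recommended."),
    ("model_a", "Budget buyers usually compare Bolt Cover and Acme Insure."),
    ("model_b", "Bolt Cover is a common pick for low-cost policies."),
]
brands = ["Acme Insure", "Bolt Cover"]

shares = mention_share(answers, brands)
for model, brand_shares in shares.items():
    print(model, brand_shares)
```

Running the same calculation on answers collected at different dates would give a rough view of how each model's picture of a brand shifts over time.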

The future of brand perception analysis

What is the future of brand perception analysis? Derian pointed to the way AI search engines and chatbots are evolving to become e-commerce tools. “It will affect the way brands sell products,” he said. He believes this evolution will create many opportunities within the field of product optimisation and product visibility tracking.

Batista also reflected on the way AI is transforming e-commerce. With models directing consumers on what purchases to make, he maintained that brands should be working with tools to understand the conversation. He added, “If you're not, then you're missing out on potential visibility because the SERP is no longer the one-stop shop it used to be for research.”
